Vehicle Detection and Tracking in Highway Video Footage

Detect vehicles and track them in a video, using HOG features, an SVM, and a heat map to find cars in each frame.

Vehicle Detection and Tracking

This project focused on detecting vehicles and tracking them in a video. Training images of cars and non-cars are run through a Histogram of Oriented Gradients (HOG) feature extraction pipeline. I then train a Support Vector Machine (SVM) to classify images as car or non-car.

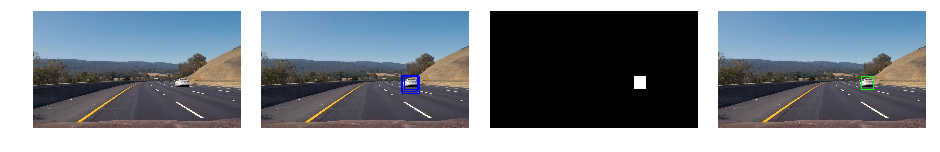

A sliding window technique is used to classify multiple sub-sections of each frame, and all of the potential detections are then combined into a heat map. Finally, a bounding box for each car is drawn on the frame.

This is an overview of the vehicle tracking project that also included advanced lane finding. The combined result is very interesting to see.

Goals of the Project

- Perform a Histogram of Oriented Gradients (HOG) feature extraction on a labeled training set of images and train a Linear Support Vector Machine (SVM) classifier.

- Apply a color transform and append binned color features, as well as histograms of color, to the HOG feature vector.

- Normalize your features and randomize a selection for training and testing.

- Implement a sliding-window technique and use the trained classifier to search for vehicles in images.

- Run the pipeline on a video stream (testing with test_video.mp4 and later implementing on the full project_video.mp4) and create a heat map of recurring detections frame by frame.

- Use the heat map to reject outliers and follow detected vehicles.

- Estimate a bounding box for vehicles detected.

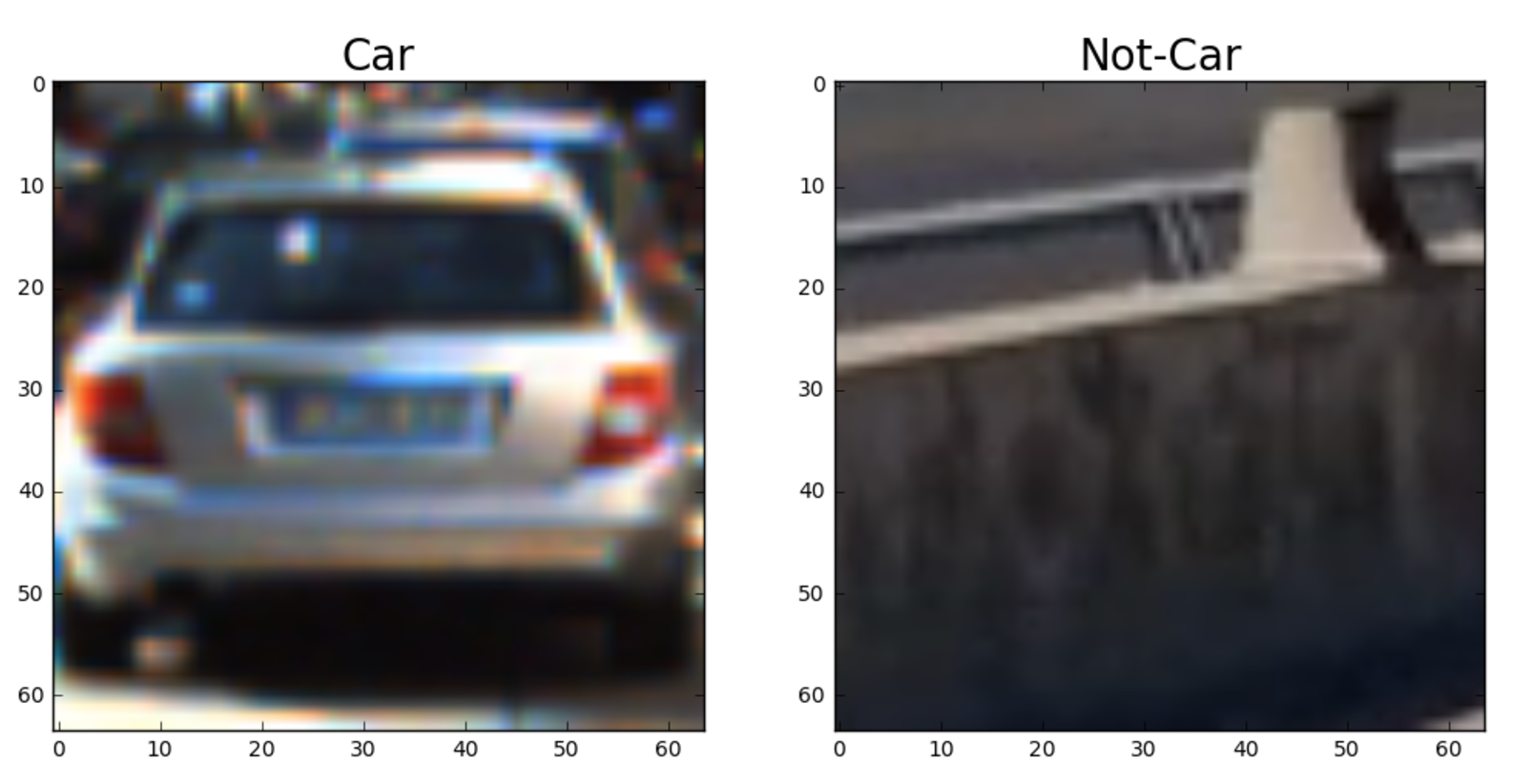

I started by reading in all the vehicle and non-vehicle images. Here is an example of one of each of the vehicle and non-vehicle classes:

I then explored different color spaces and different skimage.feature.hog() parameters: orientations, pixels_per_cell, and cells_per_block.

I selected some random images from each of the two classes and displayed them to get a feel for what the skimage.feature.hog() output looks like.

Histogram of Oriented Gradients (HOG)

The code for this step is contained in the second code cell of the IPython notebook under Utilities.

Here is an example using the YCrCb color space and HOG parameters of orientations=8, pixels_per_cell=(8, 8) and cells_per_block=(2, 2).

Choosing HOG Parameters

I tried many combinations of parameters, trained the classifier with each set, and achieved 95%-98% accuracy during training. I found that spatial binning and color histograms added no value to the classifier and slowed it down. I also found that the YUV color space gave the best results (and the fewest false positives during testing). I continued to tune just the HOG parameters and achieved good training results with the following:

| Parameter | Value |
|---|---|
| Color Space | YUV |
| Orientations | 11 |
| Pixels per Cell | 16 |
| Cells per Block | 2 |
| HOG Channels | ALL |
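As a quick sanity check on these parameters, the length of the resulting HOG feature vector can be worked out by hand. The sketch below assumes 64x64 training patches (the patch size is not stated in this write-up) and the overlapping-block layout used by skimage's HOG implementation, where blocks advance one cell at a time. The function name is hypothetical.

```python
def hog_feature_length(image_size, orient, pix_per_cell, cell_per_block, channels):
    """Length of a block-normalized HOG descriptor for a square image."""
    cells_per_side = image_size // pix_per_cell
    # Blocks slide one cell at a time, so neighbouring blocks overlap.
    blocks_per_side = cells_per_side - cell_per_block + 1
    values_per_block = cell_per_block * cell_per_block * orient
    return channels * blocks_per_side ** 2 * values_per_block


# Parameters from the table above; the 64x64 patch size is an assumption.
final = hog_feature_length(64, orient=11, pix_per_cell=16,
                           cell_per_block=2, channels=3)

# Parameters from the earlier YCrCb example, counted for a single channel.
example = hog_feature_length(64, orient=8, pix_per_cell=8,
                             cell_per_block=2, channels=1)

print(final, example)  # 1188 1568
```

Under these assumptions, the coarser 16-pixel cells give a shorter vector for all three channels than the 8-pixel example gives for one, which is consistent with the goal of keeping the classifier fast.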

Training a Linear Support Vector Machine (SVM)

I trained a linear SVM purely on HOG features. After much testing, I didn't see any added value in spatial or color histograms for this project and they added a lot of overhead. However, they may be useful in different lighting conditions or road conditions.

Sliding Window Search

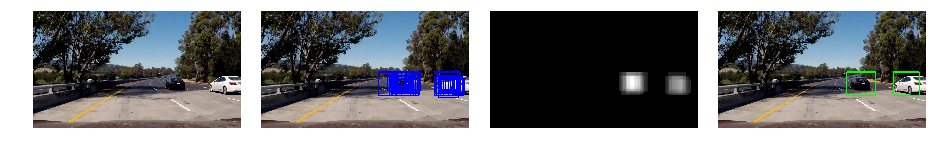

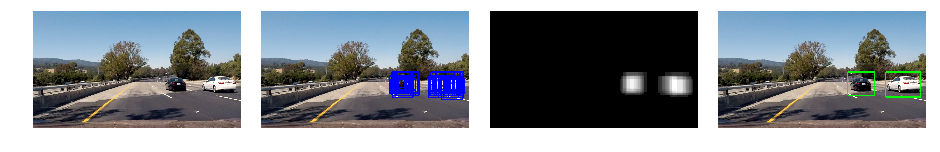

I searched each image by first cropping the search area to just the road. HOG features are then computed once per frame for the whole region, and the features for each window are taken from that result and passed to the SVM classifier.

To improve the precision of the bounding box, I divided the region into bands from top to bottom. Smaller windows were confined to the top of the region, where cars appear smallest, and the window size increased towards the foreground, where cars appear larger.
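The banded search can be sketched as follows. This only generates window coordinates; it does not reproduce the once-per-frame HOG extraction. The frame size, the band limits, the window sizes, and the 50% overlap are all hypothetical values chosen for illustration, not the ones used in the project.

```python
def slide_windows(x_range, y_range, window, overlap=0.5):
    """Return square windows ((x1, y1), (x2, y2)) that fit inside one band."""
    step = max(1, int(window * (1 - overlap)))
    boxes = []
    for y in range(y_range[0], y_range[1] - window + 1, step):
        for x in range(x_range[0], x_range[1] - window + 1, step):
            boxes.append(((x, y), (x + window, y + window)))
    return boxes


# Hypothetical bands for a 1280x720 frame: (y_start, y_stop, window_size).
# Small windows sit near the horizon, large windows near the camera.
BANDS = [(400, 464, 64), (416, 512, 96), (432, 560, 128), (464, 656, 192)]


def all_windows(frame_width=1280):
    """Collect the windows of every band into one list."""
    boxes = []
    for y_start, y_stop, size in BANDS:
        boxes.extend(slide_windows((0, frame_width), (y_start, y_stop), size))
    return boxes


windows = all_windows()
print(len(windows), "windows to classify per frame")
```

Restricting each window size to its own band keeps the number of windows per frame small, which matters because every window costs one classifier prediction.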

Ultimately, I searched six subregions and scales using YUV 3-channel HOG features. To reduce jitter in the bounding box, I also kept the last set of detected bounding boxes and used them to filter the next frame and enlarge the heat map region. Finally, I optimized the search to reach nearly 2 frames per second during processing.
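One way to keep recent detections around is a fixed-length buffer of per-frame box lists. This is a minimal sketch rather than the project's code: the class name, the `max_frames` parameter, and the box format `((x1, y1), (x2, y2))` are assumptions for illustration.

```python
from collections import deque


class DetectionHistory:
    """Remember the detection boxes of the most recent frames."""

    def __init__(self, max_frames=15):
        # A deque with maxlen silently drops the oldest frame when full.
        self._frames = deque(maxlen=max_frames)

    def add(self, boxes):
        """Store the boxes detected in the current frame."""
        self._frames.append(list(boxes))

    def combined(self):
        """Return the boxes of all remembered frames as one flat list."""
        return [box for frame in self._frames for box in frame]


history = DetectionHistory(max_frames=2)
history.add([((0, 0), (64, 64))])
history.add([((8, 0), (72, 64))])
history.add([((16, 0), (80, 64))])  # the first frame is dropped here

print(history.combined())
# [((8, 0), (72, 64)), ((16, 0), (80, 64))]
```

Feeding the combined list into the heat map, instead of only the current frame's boxes, lets a car that is detected repeatedly accumulate more heat than a one-off false positive.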

Video Implementation

Here is the final video implementation including lane line detection. The pipeline applies the advanced lane finding techniques and vehicle detection and tracking to each frame of the video.

Final Project Video with Lane Detection

Detection and False Positives

I recorded the positions of positive detections in each frame of the video. I also kept the positions detected in previous frames and added them to the current set. From the positive detections, I created a heat map and then thresholded that map to identify vehicle positions. I then used scipy.ndimage.measurements.label() to identify individual blobs in the heat map. I constructed bounding boxes to cover the area of each blob detected.
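The heat map steps described above can be sketched in plain Python. To keep the example dependency-free, a small breadth-first flood fill with 4-connectivity stands in for the SciPy labeling call; it is not the function the project used. The function names, the tiny 20x12 grid, and the threshold of 2 are hypothetical.

```python
from collections import deque


def build_heatmap(shape, boxes):
    """Add one unit of heat to every pixel inside each detection box."""
    height, width = shape
    heat = [[0] * width for _ in range(height)]
    for (x1, y1), (x2, y2) in boxes:
        for y in range(max(0, y1), min(height, y2)):
            for x in range(max(0, x1), min(width, x2)):
                heat[y][x] += 1
    return heat


def threshold(heat, minimum):
    """Zero out pixels covered by fewer than `minimum` detections."""
    return [[v if v >= minimum else 0 for v in row] for row in heat]


def blob_boxes(heat):
    """Bounding box of each 4-connected blob of non-zero pixels."""
    height, width = len(heat), len(heat[0])
    seen = [[False] * width for _ in range(height)]
    boxes = []
    for y0 in range(height):
        for x0 in range(width):
            if heat[y0][x0] == 0 or seen[y0][x0]:
                continue
            seen[y0][x0] = True
            queue = deque([(x0, y0)])
            xs, ys = [], []
            while queue:  # flood fill one blob
                x, y = queue.popleft()
                xs.append(x)
                ys.append(y)
                for nx, ny in ((x + 1, y), (x - 1, y), (x, y + 1), (x, y - 1)):
                    if (0 <= nx < width and 0 <= ny < height
                            and heat[ny][nx] and not seen[ny][nx]):
                        seen[ny][nx] = True
                        queue.append((nx, ny))
            boxes.append(((min(xs), min(ys)), (max(xs) + 1, max(ys) + 1)))
    return boxes


# Two overlapping detections on one car, plus one isolated false positive.
detections = [((2, 2), (8, 8)), ((4, 3), (10, 9)), ((14, 1), (17, 4))]
heat = threshold(build_heatmap((12, 20), detections), minimum=2)
print(blob_boxes(heat))  # [((4, 3), (8, 8))]
```

Only the area where the two detections overlap survives the threshold, so the isolated box on the right is rejected as an outlier.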

Conclusions and Discussion

I tried many combinations of color spaces for HOG feature extraction and color histograms. I found that HOG worked well on the lightness channels (L or Y). However, without spatial or color features, HOG on a single channel resulted in many false positives. I tried different orientation counts and pixels-per-cell values for HOG extraction to find settings that produced good training results but were general enough to avoid over-fitting.

Spatial and color histogram features weren't the focus of my adjustments. I found that they added no significant value to the classifier and only increased processing time. Some false positives still make it through, and the bounding box is constantly adjusting. I did my best to reduce the jitter by banding the search region for each search window size and by using previously detected regions to augment the heat map.

There is still considerable room to reduce the jitter of the bounding box. Overall, I think the classifier performed well, and the pipeline could be improved further by augmenting the training dataset.

Check out the project's GitHub repo to see the full implementation and code.

A. Khatib
